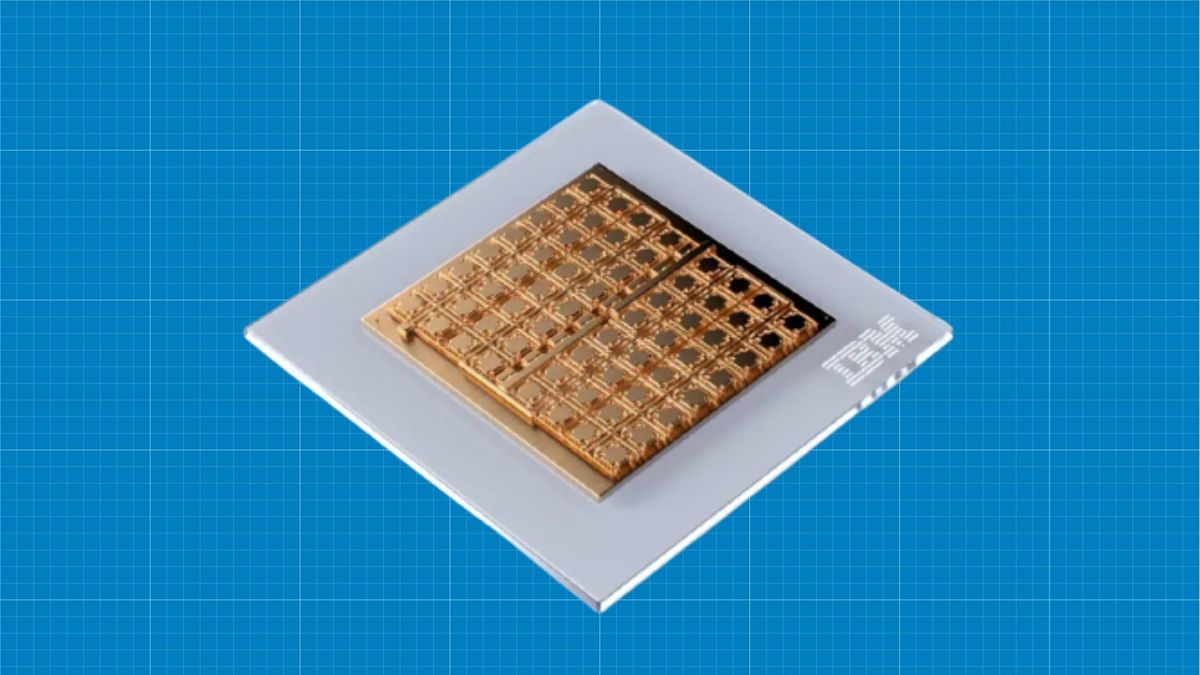

IBM claims to have cooked up a new mixed-signal, part-analogue chip that combines phase-change memory with digital circuits. The company says it will match GPU performance for AI inferencing while being much more efficient.

No, we don’t entirely understand that either. But the implications are easy enough to grasp. If this chip takes off, it could put a cap on the skyrocketing demand for GPUs used in AI processing and save them for, you know, gaming.

According to El Reg, this isn’t the first such chip IBM has produced. But it’s on a much larger scale and is claimed to demonstrate many of the building blocks that will be needed to deliver a viable low-power analogue AI inference accelerator chip.

One of the main bottlenecks in AI inferencing is shunting data back and forth between the memory and processing units, which slows processing and costs power. As IBM explains in a recent paper, its chip does things differently. It uses phase-change memory (PCM) cells both to store inferencing weights as analogue values and to perform computations.

It’s an approach known as analogue in-memory computing. It basically means you do the compute and the memory storage in the same place, and so, hey presto, no more data shunting, lower power consumption and more performance.
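For the curious, here’s a rough sketch of the general idea. It is not IBM’s actual design. It assumes an idealised crossbar in which each weight is stored as a cell’s conductance and inputs are applied as voltages. The physics then does the multiply-accumulate: Ohm’s law gives each cell’s current, and Kirchhoff’s current law sums those currents along each column wire. The function name `crossbar_mvm` is our own, hypothetical invention.

```python
from typing import List


def crossbar_mvm(conductances: List[List[float]], voltages: List[float]) -> List[float]:
    """Toy model of analogue in-memory matrix-vector multiplication.

    Assumes an ideal crossbar (hypothetical helper, not IBM's design):
    conductances[i][j] is the weight stored in the cell at row i, column j,
    and voltages[i] is the input applied to row i. Returns the current on
    each column wire: I[j] = sum_i G[i][j] * V[i].
    """
    rows = len(conductances)
    if rows != len(voltages):
        raise ValueError("need one input voltage per crossbar row")
    cols = len(conductances[0]) if rows else 0
    currents = [0.0] * cols
    for i in range(rows):
        for j in range(cols):
            # Ohm's law: the cell passes a current of G * V ...
            # ... and Kirchhoff's current law adds it to its column's total.
            currents[j] += conductances[i][j] * voltages[i]
    return currents


if __name__ == "__main__":
    # Arbitrary units: two inputs, two outputs.
    weights = [[1.0, 2.0], [3.0, 4.0]]
    inputs = [0.5, 1.0]
    print(crossbar_mvm(weights, inputs))  # [3.5, 5.0]
```

The point is that the weights never move. They sit in the memory cells, and the summed column currents *are* the result. A real chip also has to deal with negative weights, device noise and converting those currents back to digital values, all of which this toy leaves out.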

Things get more complex when you start describing the scale and scope of the weighting matrices that the chip can natively support. So, we won’t go there for fear of instantly butting up against the exceedingly compact limits of our competence in such matters.

But one thing is for sure: AI-processing power consumption is getting out of hand. An AI inferencing rack reportedly draws close to 10 times the power of a “normal” server rack. So, a more efficient solution would surely gain rapid traction in the market.

Moreover, for us gamers the immediate implications are clear. If this in-memory computing lark takes off for AI inferencing, Microsoft, Google et al. will be buying fewer GPUs from Nvidia, and the latter might just rediscover its interest in gaming and gamers.

The other killer question is how long it might take to turn this all into a commercial product that AI aficionados can start buying instead of GPUs. On that subject, IBM is providing little guidance. So, it’s unlikely to be just around the corner.

But this AI shizzle probably isn’t going anywhere. So, even if it takes a few years to pan out, an alternative to GPUs would be very welcome for long-suffering gamers who have collectively jumped out of the crypto-mining GPU frying pan only to find themselves ablaze in an AI inferencing inferno.